This insightful four-part series by CKGSB Professor Chen Long examines how DeepSeek, a trailblazer in Chinese-style AI, is redefining the economics of AI and scaling laws while setting new global benchmarks. From its unprecedented surge in daily active users to groundbreaking technological advancements and strategic execution, DeepSeek presents a compelling case study on the future of AI.

The series includes four parts:

PART 1 | The arrival of a new global AI model with the highest daily active users

PART 2 | What has DeepSeek achieved?

PART 3 | How did DeepSeek accomplish this?

PART 4 | What does the “DeepSeek Phenomenon” imply?

Stay tuned as we uncover how DeepSeek’s innovations are redefining the AI industry and shaping its global trajectory.

PART 4 | What does the DeepSeek phenomenon imply?

01 | AI Economics and Scaling Law Defined by the ChatGPT Phenomenon

If we limit our understanding of the DeepSeek phenomenon to the success of individual companies, we miss the broader implications for AI economics. AI economics refers to the ways in which AI, as a technological force, influences the fundamental laws of the economy and business.

Sam Altman outlines three layers of AI economics: “Technology → Scaling Law → Value Creation.” These three layers matter, but they leave out a key element: how the investment threshold for the technology determines how many players can participate, and therefore whether the resulting business model is oligopolistic or inclusive.

In short, the first stage of the intelligence revolution, established by OpenAI, is characterized by U.S.-led oligopolistic business models. The investment required meant that only a few players could put the scaling law into practice, making innovation costs the key factor in determining industry structure. DeepSeek’s breakthrough will change this path and greatly expand AI’s accessibility.

As innovation costs fall sharply, the previously oligopolistic scaling law will give way to an inclusive one, and AI as a business will shift from oligopolistic to inclusive.

From this perspective, DeepSeek’s breakthrough changes both AI economics and the scaling law.

Now, let’s take a look at the AI economics and the scaling law behind the ChatGPT phenomenon.

The past few years, dominated by the ChatGPT phenomenon, have seen massive investment in AI, the stockpiling of computing power, and reliance on the scaling law to turn both into capability. This approach, reflected in the first two of Altman’s rules, is often described as “miracles through brute force” and has widely been considered the only path to Artificial General Intelligence (AGI).

Why is computing power so crucial? Richard Sutton, often called the father of reinforcement learning, answered this in his classic 2019 essay “The Bitter Lesson”: in the long run, computation is the deciding factor. History has repeatedly shown that AI researchers try to build human knowledge into their algorithms. This usually helps in the short term and is personally satisfying, but in the long run it plateaus and can even inhibit further progress. Breakthroughs eventually come from the opposite approach: scaling up computation through search and learning. That success is bitter and hard to swallow, because it is a victory over our favored, human-centric way of thinking.

At the enterprise level, joining the arms race for computing power is a prerequisite for entering the game. As a result, the most advanced AI has largely been a game played by only a few players. A typical example is the “Seven Sisters” of U.S. tech (often called the “Magnificent Seven” in English-language coverage). These companies are the main carriers and beneficiaries of the three rules Altman described. Specifically:

- In computing power reserves, for instance, the Seven Sisters and their affiliated companies account for over 90% of purchases of Nvidia’s H100.

- In investments, the 2025 capital expenditure plans of 10 tech companies, including the Seven Sisters, are equivalent in a single year to the total cost of the Apollo moon landing program.

- In AI applications, the Seven Sisters are also the most advanced, whether by integrating their existing business with AI or by investing in and acquiring leading AI startups. In the first half of 2024 alone, the Seven Sisters invested $24.8 billion into the AI industry, more than the total venture capital investment in the UK in a year. These investments cover areas such as AI chips, large models, humanoid robots, autonomous driving, AI healthcare, and more.

- In terms of market value, the Seven Sisters accounted for more than 50% of the S&P 500’s growth in 2024, and their combined market value now exceeds a third of the index’s total value, approaching the size of China’s entire GDP.

As a result, in an October 2024 interview, Carson Block, the founder of the famous hedge fund Muddy Waters, said: “Don’t think too much, just close your eyes and buy the Seven Sisters of the U.S. stock market, and you will make a profit.”

Thus, over the past two years dominated by the ChatGPT phenomenon, the main players described by Altman’s three rules were large U.S. tech companies with abundant chips, large models, cloud computing, and digital application scenarios. The pattern is clearly both geographic and oligopolistic.

This pattern is so pronounced that the U.S. government has come to believe that U.S. companies must develop the technology across every major link of the AI value chain, with AI then diffusing gradually to the rest of the world. On this basis, the U.S. government issued the Framework for Artificial Intelligence Diffusion in January 2025, which aims to control the pace and extent of AI’s spread to different regions and countries through controls on advanced chips and large model weights.

On the second day of the Trump administration, with the backing of the U.S. government, OpenAI, SoftBank (led by Masayoshi Son), and Oracle announced the “Stargate” project, one of whose goals is to invest $500 billion over four years in computing infrastructure for OpenAI, continuing to “make miracles through brute force” in pursuit of U.S. dominance in AGI.

02 | The DeepSeek Phenomenon: How Does It Change AI Economics and Scaling Laws?

The DeepSeek phenomenon is set to transform AI economics, including scaling laws, and will change the industry landscape defined by the ChatGPT phenomenon.

While Altman’s three laws suggest there is “no reason” for exponentially increasing investment to stop, the players involved in these laws will undergo profound changes.

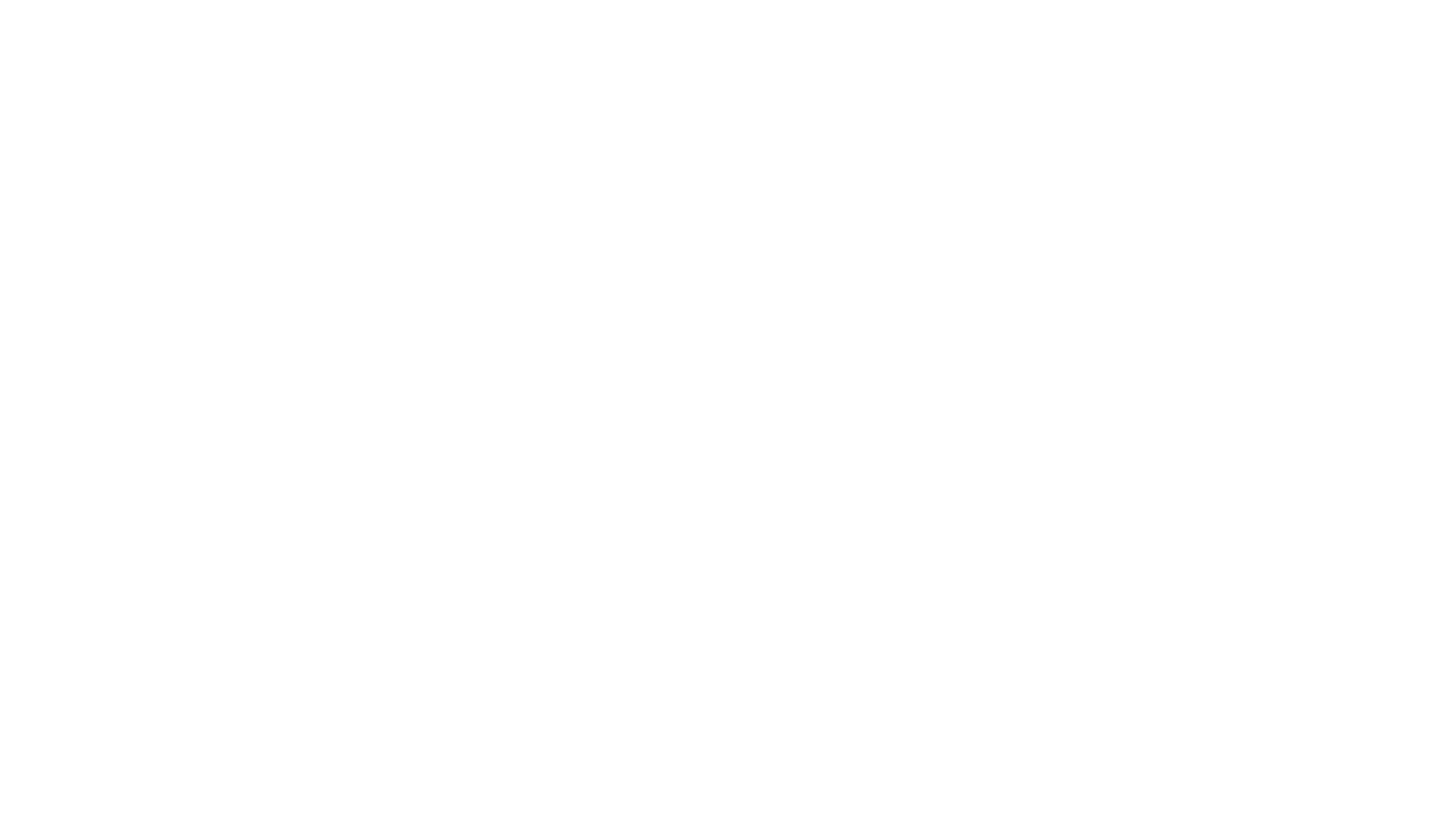

Among the three laws, the second, concerning the strength of scaling laws, is crucial for the development of the AI industry. Shortly after the release of OpenAI’s o1 model, Jensen Huang pointed out during Nvidia’s November 2024 earnings call (for the third quarter of fiscal 2025): “The law of pretraining scaling is not over, and more importantly, we’ve discovered two other scaling laws: one is the scaling law of post-training, and the other is the scaling law of inference time. Combined, we are actually facing three scaling laws simultaneously, so our infrastructure demands are truly massive.”

At its core, the scaling law is about the rate at which costs fall: falling costs are what drive the expansion of demand. This is why the DeepSeek phenomenon matters. Whoever can reduce costs the fastest and by the largest margin will capture demand and become a competitive player.

The new wave of AI players from China, exemplified by DeepSeek, has already changed the cost structure of AI services by combining top-tier model capabilities with the lowest costs, thereby altering the player landscape in the AI industry. As AI evolves, companies that can blend engineering innovation with AI capabilities, whether or not they are traditional tech giants, can now become players in the AI industry.

After DeepSeek gained popularity, many revisited an old economic concept: the “Jevons Paradox.” It holds that when the efficiency of using a resource improves, total consumption of that resource often rises rather than falls. When William Stanley Jevons proposed the idea in 1865, he used it to explain why coal consumption grew even faster as coal came to be used more efficiently. After DeepSeek’s rise, Microsoft CEO Satya Nadella was one of the first to invoke the concept, suggesting that more efficient algorithm engineering of the kind DeepSeek demonstrated unlocks more scenarios and applications, thereby driving overall growth in demand for computing power.
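The arithmetic behind the paradox can be sketched with a simple, hypothetical model. The constant-elasticity demand curve, the function name `resource_consumption`, and the parameter values below are illustrative assumptions, not figures from DeepSeek, Microsoft, or Jevons. If an efficiency gain cuts the resource cost of each unit of useful output tenfold, total resource use rises only when demand is elastic (elasticity above 1):

```python
def resource_consumption(efficiency: float, elasticity: float, scale: float = 1.0) -> float:
    """Total resource use under a hypothetical constant-elasticity demand model.

    Assumptions (illustrative only): the cost of one unit of useful output is
    1 / efficiency, and demand for output follows Q = scale * cost ** (-elasticity).
    """
    cost_per_unit = 1.0 / efficiency
    output_demanded = scale * cost_per_unit ** (-elasticity)
    # Each unit of output consumes 1 / efficiency units of the underlying resource.
    return output_demanded / efficiency


# Compare resource use before and after a hypothetical 10x efficiency gain.
for elasticity in (0.5, 1.0, 1.5):
    before = resource_consumption(efficiency=1.0, elasticity=elasticity)
    after = resource_consumption(efficiency=10.0, elasticity=elasticity)
    print(f"elasticity={elasticity}: consumption changes by a factor of {after / before:.2f}")
```

Under this model, resource use scales by the efficiency gain raised to the power (elasticity - 1): inelastic demand shrinks total consumption, unit elasticity leaves it unchanged, and elastic demand, the case Nadella had in mind for AI computing, makes it grow.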

This is certainly true, but what hasn’t been discussed is how the pool of potential players has greatly changed.

In addition to transparent technical paths and sharp cost reductions, the third factor reshaping the scaling law is open source.

DeepSeek’s models are more open than Llama, an approach that has been widely praised in the industry. Marc Andreessen, the renowned Silicon Valley venture capitalist and co-founder of a16z, described DeepSeek-R1 as “a profound gift to the world.” Nathan Lambert, a research scientist at the Allen Institute for AI, pointed out, “DeepSeek is one of the most open models in the cutting-edge field, and they have done an outstanding job in spreading AI knowledge. Their papers are very detailed and, for teams around the world, also very practical in improving training techniques. The DeepSeek-R1 model uses a very permissive MIT license. This means there are no downstream restrictions, and it can be used for commercial purposes, with no use-case restrictions. You can create synthetic data using the model’s outputs… [What you’ll see] is that the true spirit of open-source is about sharing knowledge and driving innovation.”

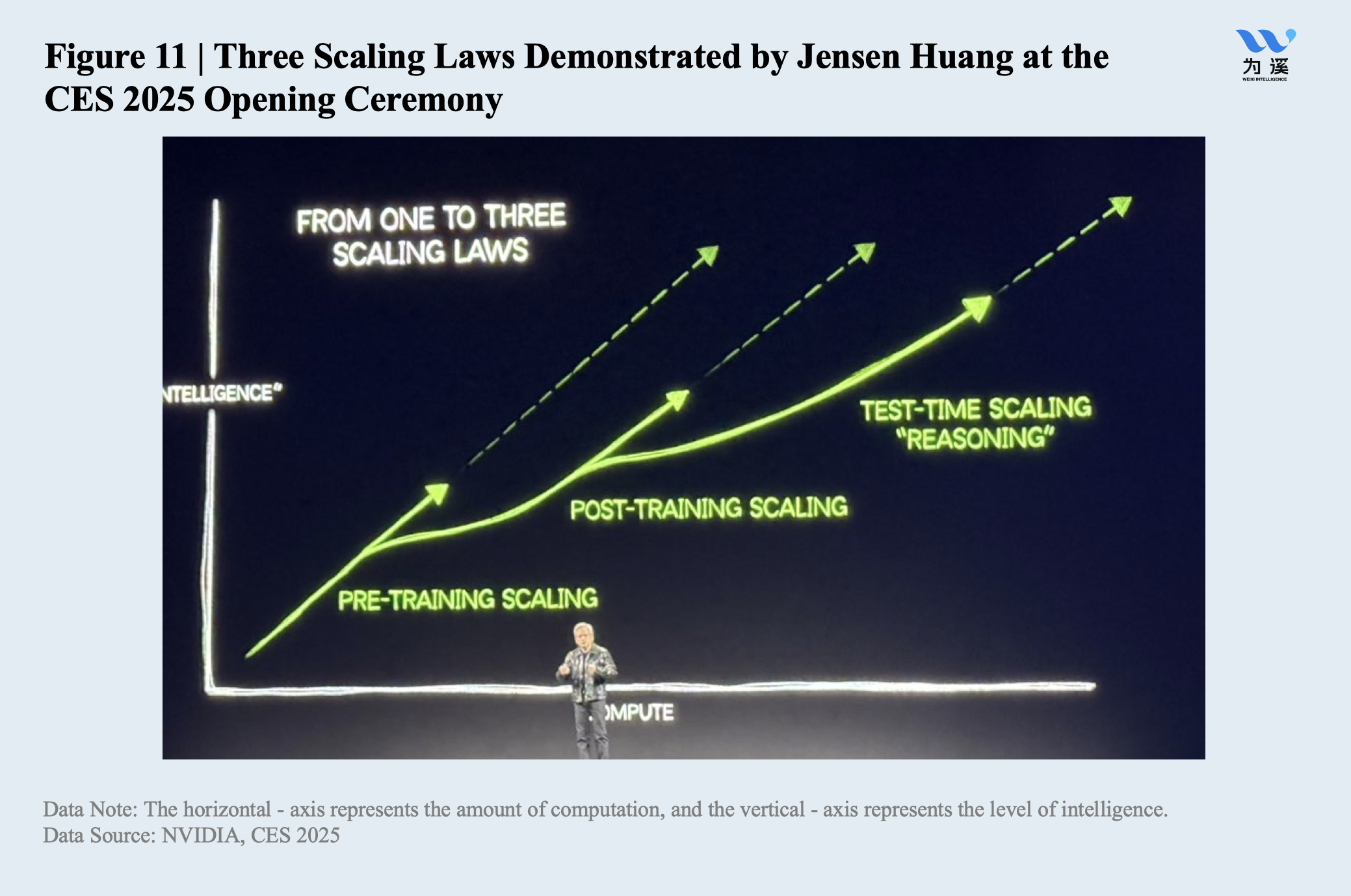

Open sharing has attracted a growing number of players. As of January 28, just days after R1’s launch, at least 670 models built on DeepSeek-R1 had appeared on Hugging Face, with over 3.2 million total downloads, growing at about 30% per day. Downloads of DeepSeek-R1 itself exceeded 700,000, growing at about 40% per day. As the yellow curve on the right side of Figure 12 shows, DeepSeek’s popularity (measured in likes) rose almost vertically, overtook Llama, and by February 6 had reached second place.

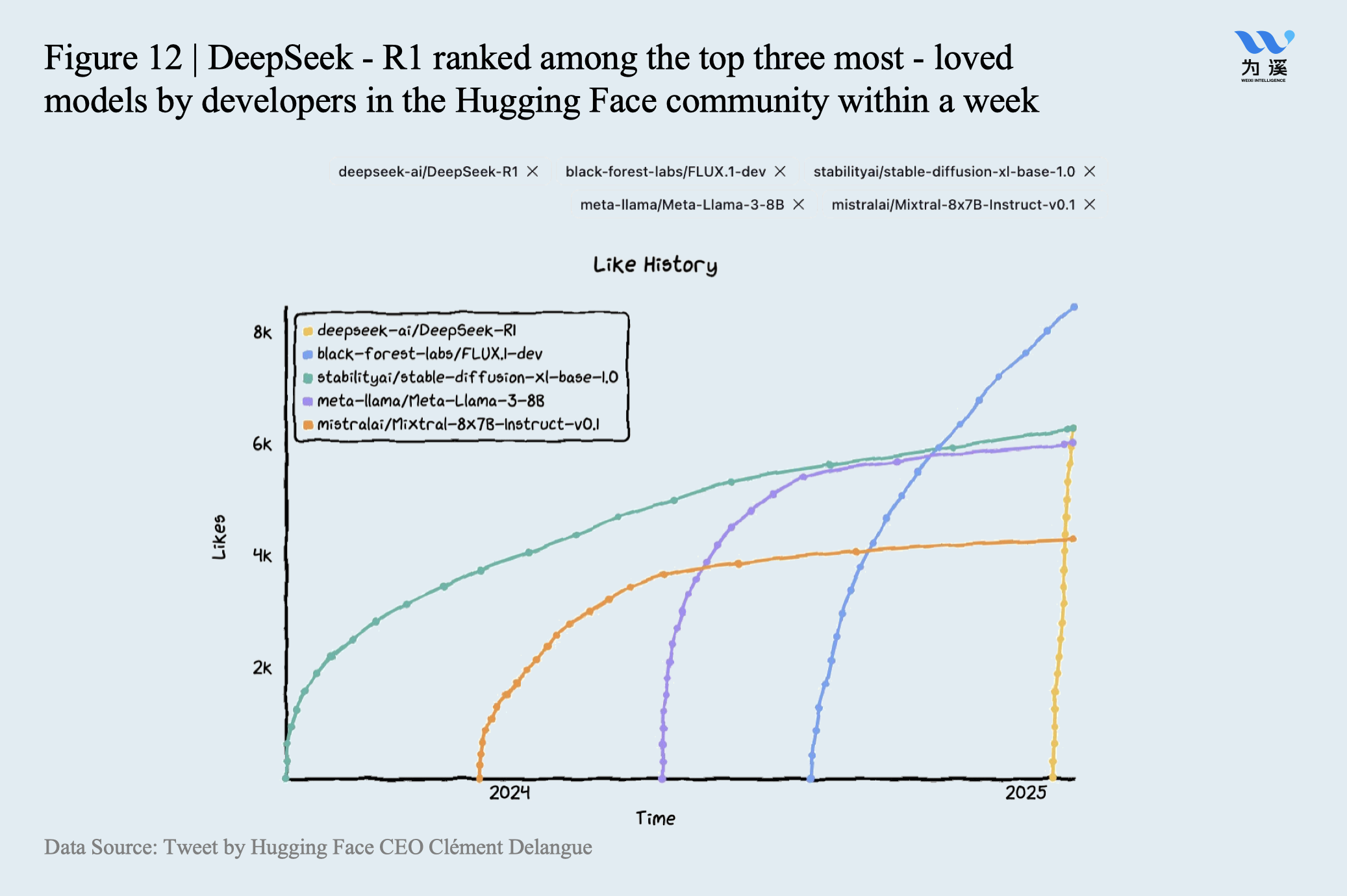

Before DeepSeek, Alibaba’s Qwen was another Chinese example of a more thoroughly open-source model. On Hugging Face, the number of derivative models based on Qwen has surpassed 96,000, ranking first globally and ahead of Llama. In addition, all of the top ten open-source large models in the community are derivatives of Qwen’s open-source models produced through secondary training (Figure 13).

On January 31, 2025, during an online AMA (Ask Me Anything) on Reddit, OpenAI CEO Sam Altman admitted for the first time, “I personally think we have been on the wrong side of history here and need to figure out a different open source strategy.”

03 | The Impact of DeepSeek on AI Economics and Scaling Laws

To summarize, DeepSeek represents a pivotal shift in the evolution of AI. It has fundamentally altered both AI economics and scaling laws.

Through transparent and accessible technical roadmaps, innovation-driven reductions in model development and usage costs, and a more thorough open-source model, Chinese AI players like DeepSeek and Qwen have shifted the oligopolistic scaling law, originally dominated by large U.S. tech companies, toward a more inclusive scaling law. As a result, AI industry players are no longer confined to U.S. tech giants and industry leaders but now include companies around the world, and the proliferation of AI applications will accelerate globally.

The impact on cost and pricing signals that a new inclusive scaling law is already taking effect.

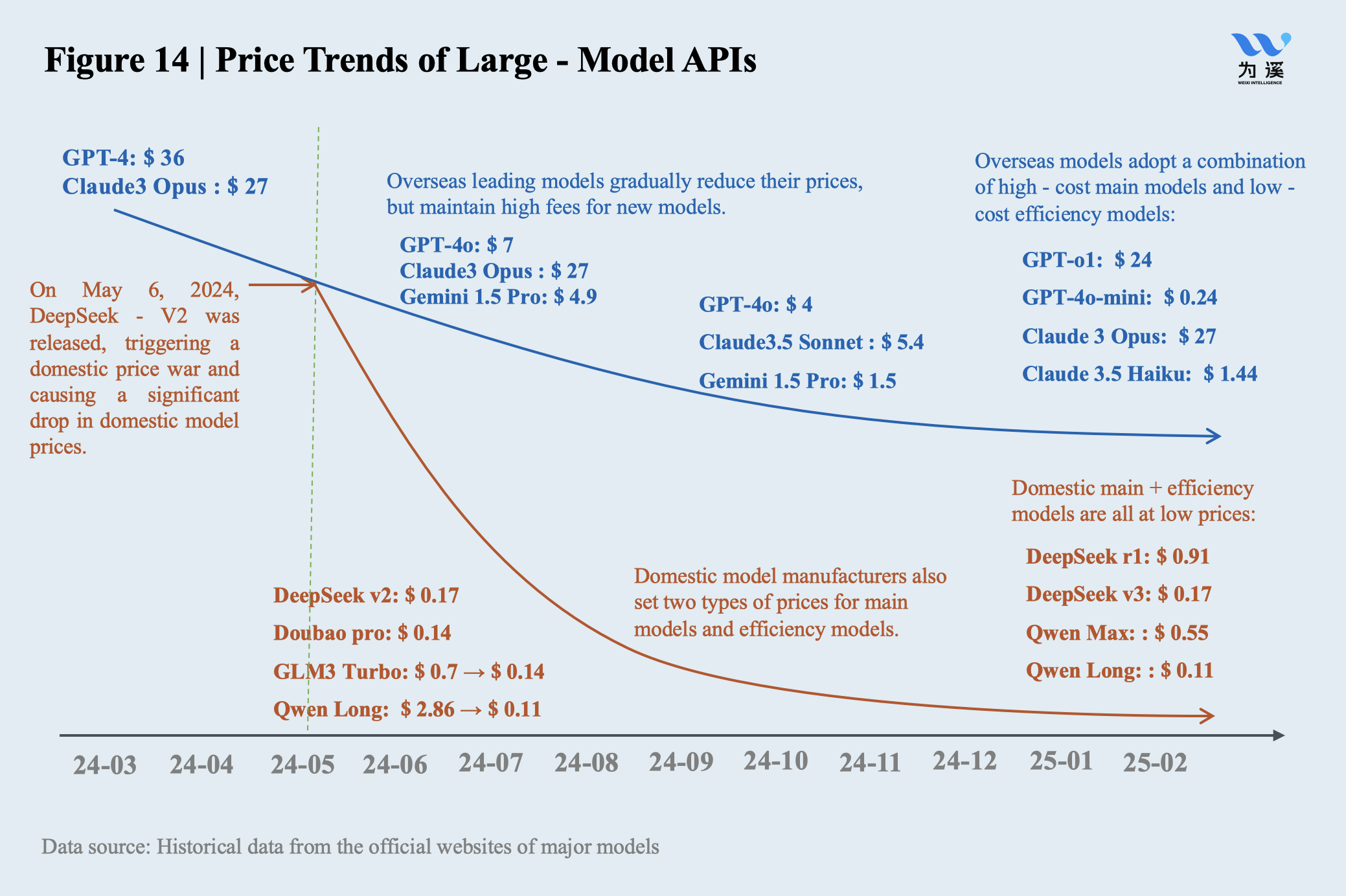

As a validation of this core conclusion, we will briefly discuss the sharp decline in AI usage prices, which is a concrete manifestation of the scaling law and is clearly being driven by Chinese enterprises.

A little-known fact is that, as shown in Figure 14, the large model API price war in Mainland China in May 2024 was actually initiated by DeepSeek. At that time, the DeepSeek-V2 model, already using engineering optimizations such as multi-head latent attention (MLA) and mixture-of-experts (MoE), was able to turn a profit at extremely low prices. Domestic large enterprises and AI startups were forced to follow suit. By February 2025, usage prices for large models in China were already significantly lower than in the U.S. U.S. model developers such as OpenAI continue to cut prices, and this trend will accelerate as Chinese large model companies expand internationally.

Looking ahead, DeepSeek’s transparent sharing and free open-source models promise to improve model efficiency globally. In the coming months, large reasoning models will be rapidly democratized worldwide alongside foundation models, accelerating the real-world application of AI across sectors, in both professional and everyday contexts.

Conclusion

AI technology reshapes the world through breakthrough phenomena. Two years after the release of ChatGPT, DeepSeek has emerged as the second groundbreaking product. Understanding the dynamics behind these phenomena is essential.

In this analysis, we draw three key insights:

- DeepSeek will soon surpass ChatGPT to become the large model with the most daily active users worldwide. Contrary to past expectations, Chinese AI developers are catching up with the most advanced U.S. large models. The gap between U.S. and Chinese large models is closing, not widening.

- In terms of cost, Chinese large models cost only a fraction (about 5% to 10%) of what U.S. large models do. Despite the constraints on computing power facing Chinese enterprises, DeepSeek has pioneered a distinctly Chinese approach to AI innovation: the best large model performance at the lowest cost. This combination is the most significant breakthrough DeepSeek represents, and from the perspective of industry structure, it is truly disruptive.

- A shift in industry dynamics: DeepSeek has introduced a clearer technical roadmap, significantly reduced development and application costs, and implemented a more open-source model. These factors will fundamentally alter the economic logic of the AI industry from the previous phase. The scaling laws, which previously centered around oligopolistic players, are now transitioning to an inclusive scaling law. This new scaling law will promote the democratization of AI technology, enabling more companies and developers to participate in AI application and innovation.

Unlike the ChatGPT era, future AI industry players will not be limited to U.S. tech giants or the U.S.–China rivalry; instead, the field will expand globally. The rise of DeepSeek not only promotes the democratization of AI technology, but also brings a multiplier effect to the combination of globalization and AI technology.