Beijing, July 25, 2025 – A study by Professor Tianshu Sun, Distinguished Dean's Chair Professor of Information Systems at Cheung Kong Graduate School of Business (CKGSB), introduces a new framework that evaluates AI's intelligence against human intelligence standards, addressing a key gap in AI assessment.

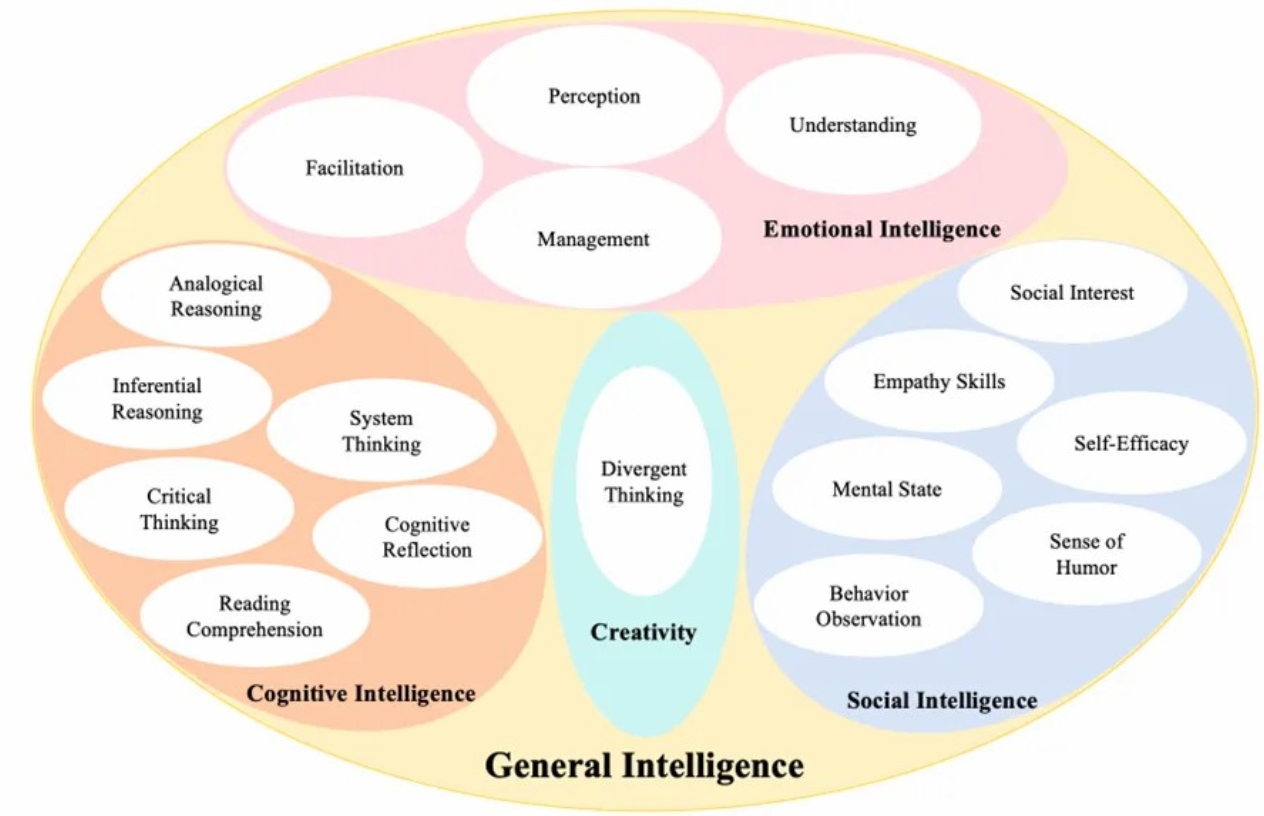

Published in 2025 in Information Systems Research, a top journal on the UTD 24 list, his paper "Unraveling Generative AI from a Human Intelligence Perspective: A Battery of Experiments" offers a new approach to understanding AI's capabilities and limits across four dimensions of human intelligence: cognitive intelligence, emotional intelligence, social intelligence, and creativity. The research helps CEOs and policymakers decide where large language models (LLMs) should and should not be adopted, choose integration strategies for different job types, and compare multiple LLMs to identify the model best suited to specific job and business requirements.

Using this framework, extensive online experiments comparing human participants with LLMs reveal that GPT-4 surpasses humans in cognitive intelligence, emotional intelligence, and creativity, but falls short in social intelligence.

To validate the practical value of the framework, the study introduces an “AI occupational intelligence evaluation system” that lets companies measure and compare the performance and value of multiple LLMs in different roles.

The study also constructs a complete profile of "occupational demand for AI intelligence" across 518 job groups, showing where AI models "have the brains for the job." It finds that AI aligns well with "intelligence-intensive" roles in IT, law, finance, and editing, while in services, manufacturing, transportation, and logistics, AI's adaptability remains limited so far. However, strong AI-job alignment does not necessarily translate into high economic value or return on investment. Positions such as sales, administration, and customer service are considered the most AI-compatible, and are where AI can best support the industry's transition to an intelligent, technology-centered future.

“As AI is quickly adopted across industries and AI agents are rapidly emerging, human-AI collaboration is becoming more common. To keep up, we need a new way to evaluate AI from a human intelligence perspective,” said Sun. “This will help us better understand its strengths and limitations, and support more effective collaboration between humans and AI.”

The paper was co-authored by CKGSB Professor Sun; Wen Wang, Assistant Professor at the University of Maryland; and Siqi Pei, Assistant Professor at Shanghai University of Finance and Economics.

Professor Tianshu Sun will speak at our upcoming Asia Start program this November.